“Destroys well paying jobs” so what, AI art is okay?

Over on Ars Technica, a couple of days ago, someone unironically made the point that AI art would improve society by forcing “starving artists” to become “sated burger flippers”.

How it started: “Advancements in technology will lead to a post-scarcity society where one is free to explore their interests.”

How it’s going: “Advancements in technology will allow computers to automate creativity freeing up time for artists to participate in menial labor.”

Ars Technica comments consistently seem to have the worst takes on AI art I’ve ever seen. It’s nuts.

their shit comments have somehow also leaked onto Mastodon. if you need a reply guy blocking honeypot, consider the replies to any Ars Technica article that gets reposted on their official Mastodon account

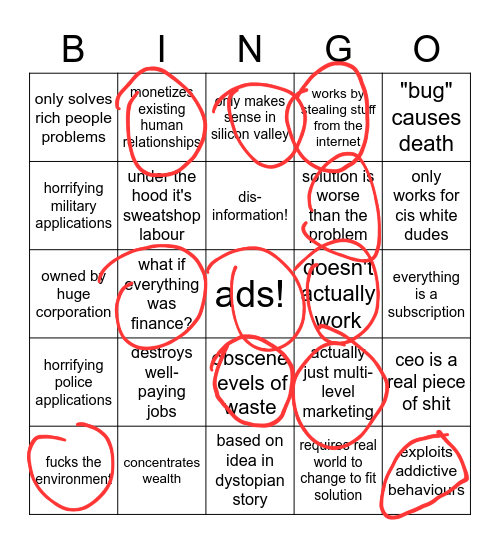

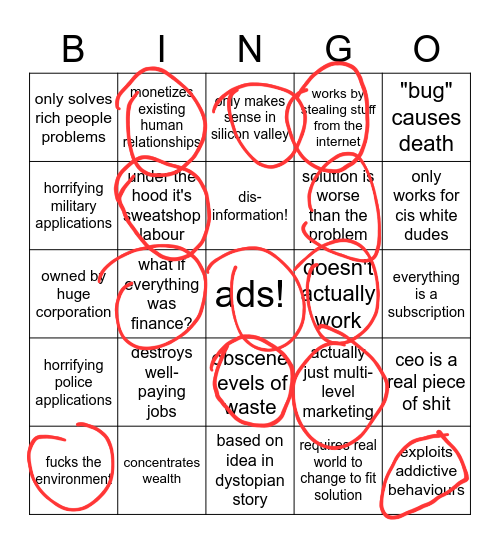

NFTs

You could add “monetizes existing human relationships” as well for the meme NFTs, and “obscene levels of waste”, considering they use blockchain and cryptocurrency.

Done, didn’t see them.

under the hood they were also sweatshop labour

Done

@kuna Agree with all but one: I’m completely fine with “destroys well paying jobs”. Many good inventions (like democracy, writing, the printing press, computers, or bridges) did that, and we were fine. Actually, can we get rid of landlords, lawyers, soldiers, brokers, marketing people and billionaires?

What well paying job do you think democracy, writing, and bridges destroyed?

Kings, bards, and ferry rowers?

I’m curious how many “revolutionary new technologies” only work for cis white dudes. Like maybe it’s just my privilege speaking, but there has to be a pretty narrow field where that’s even relevant, right?

Does it apply to much more than just AIs trying to recognize or replicate human appearances?

AI resume sorting has had this problem as well.

There’s also the “unmoderated free speech zone” websites.

Other weirder shit happens too, for example my hearing aids seem to be less able to recognize that the voices of black people, especially black men, are people speaking to me. That’s less Silicon Valley to my knowledge but modern hearing aids are increasingly high tech and clearly are struggling with similar biases that other tech is.

There’s also a lot of issues with security technology, like what you’ll find in airports, and with medical technology that isn’t prepared for non cis endosex bodies. If you have breasts and a penis you’re gonna get groped by the TSA because the machine thinks one of those is you hiding something. Heck, medical technology often isn’t prepared for women or people of color either.

This is definitely one of the less prevalent squares on the board but tech definitely does have a bit of a naïveté to it where they don’t think about how things like medical and search history could be used by the government against users or how anyone able to say anything they want or find anyone they want could go disastrously for some people.

Anything that evaluates people, regardless of whether it sees the face. Hiring AI has a racist bent because there does not exist non-racist training data. I think it was Amazon who kept trying to fix this, but even after scrubbing names and pictures it was still an issue.

That one is weird to me. Was it somehow extrapolating the race? Or was it favoring colleges with racist admissions? Not really sure how else it could get racist without access to names and pictures.

There are many indicators of race in hiring. For example, these characteristics could give a pretty good idea of the race of an applicant

- Name

- College or university

- Clubs or fraternities

- Location (address, not city)

While an ML program wouldn’t decide “don’t hire black men”, it may reinforce existing policies that are racist, since these programs are trained on the existing success of employees at a company.

For example, a company may not have hired someone from an HBCU. Therefore, an applicant who attended an HBCU would be viewed negatively by the hiring program.
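To make the HBCU example concrete, here is a rough toy sketch, not how any real hiring product works. The records, college names, and the `fit_hire_rates` and `score` helpers are all made up for illustration. The "model" just learns the historical hire rate per college, which is enough to show the bias surviving after names and pictures are scrubbed.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (college, was_hired).
# Names and photos are already scrubbed; the data is invented for illustration.
HISTORY = [
    ("State U", True), ("State U", True), ("State U", False),
    ("Ivy College", True), ("Ivy College", True),
    ("HBCU A", False), ("HBCU A", False),
]

def fit_hire_rates(history):
    """Learn the historical hire rate per college (a stand-in for a real model)."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for college, was_hired in history:
        total[college] += 1
        hired[college] += int(was_hired)
    return {college: hired[college] / total[college] for college in total}

def score(applicant, rates, default=0.5):
    """Score an applicant using only the college field; unseen colleges get a default."""
    return rates.get(applicant["college"], default)

rates = fit_hire_rates(HISTORY)

# Two otherwise identical applicants, no name or picture anywhere.
applicant_a = {"college": "State U", "gpa": 3.8}
applicant_b = {"college": "HBCU A", "gpa": 3.8}

print("State U applicant:", score(applicant_a, rates))
print("HBCU A applicant:", score(applicant_b, rates))
```

The HBCU applicant scores lower purely because nobody from that school was hired in the past. The college field acts as a proxy, so the old pattern carries over without the program ever seeing race.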

Names are being scrubbed, but the rest makes sense. Thanks!

anything you might see in, say, Weapons of Math Destruction. of course every technology was new once so you could also look at things like the many descendants of phrenology (take your favorite intelligence test) or various pain scales

Pain scales?

by that I mean various methods of talking to or examining people and determining how much pain they’re in. in principle there is no reason pain scales ought not to work for women and black people. but in fact they don’t, because the tests don’t exist in a vacuum. health care practitioners interpret them in a staggeringly prejudiced way, no matter how sophisticated and evidence based the tests are

EDIT: To give a more specific description - there was and is a widespread belief among doctors that black people feel less pain than white people because they have “less sensitive nerve endings.” this isn’t evidence based. black people get less pain treatment in comparable situations than white people do.

I don’t know if there is any myth about women as particular as the one above, but women also get less pain treatment than men. IUD insertion and endometriosis especially can be brutal experiences for women because doctors don’t take the associated pain seriously.

@kuna I like the irony of it being a webp.

New product/service bingo?

Looks like it! We oughta play it.

Zuck’s Metaverse somehow doesn’t get a bingo. Mostly because the fourth row only ticks the righthand column. Otherwise nearly 50-50.

Everyone, including Zuck, is hoping we all just forget about the “Metaverse”.

How about this row?

- owned by a huge corporation

- what if everything was finance (maybe a stretch?)

- ads!

- ~~doesn’t actually work~~ they have legs now

- everything is a subscription

It was just Second Life for corporations.

Posted on a tech platform.

Posted on an open tech platform

looking at his other comments, the gentleman has been escorted to the egress