Text in AI-generated images will never not be funny to me. N the most n’tural hnertis indeed.

hold on, when did the “first generation” of generative ai start?

First: our sessions and guests were mostly not controversial — despite what you may have heard

Man, you invite one Nazi to speak at your conference and suddenly you’re “the guys who invited a Nazi to speak at their conference.” How is that fair? :-(

I believe waitbutwhy came up before on old sneerclub, though in that case we were making fun of them for bad political philosophy rather than bad ai takes

It is always kind of bewildering to me though. Like, has no one ever explained to these people the health problems that highly-bred dogs tend to have? Have they never heard of ‘hybrid vigor’ or issues with smaller gene pools making populations more susceptible to disease? Were they just asleep during biology 101? I don’t get how people who think they’re so smart can have failed to consider even the most basic issues with planning to turn humanity into Gros Michel bananas.

Except it’s not really being automated out of our lives, is it? I find it hard to imagine how increasing the rate at which bullshit can be produced leads to a world with less bullshit in it.

Making me learn how to do things the right way is premature optimization

Ah yes, pragmatists, well known for their constantly sunny and optimistic outlook on the future, consequences be damned (?)

No no, it’s “order of magnitudes”. It’s like “surgeons general.”

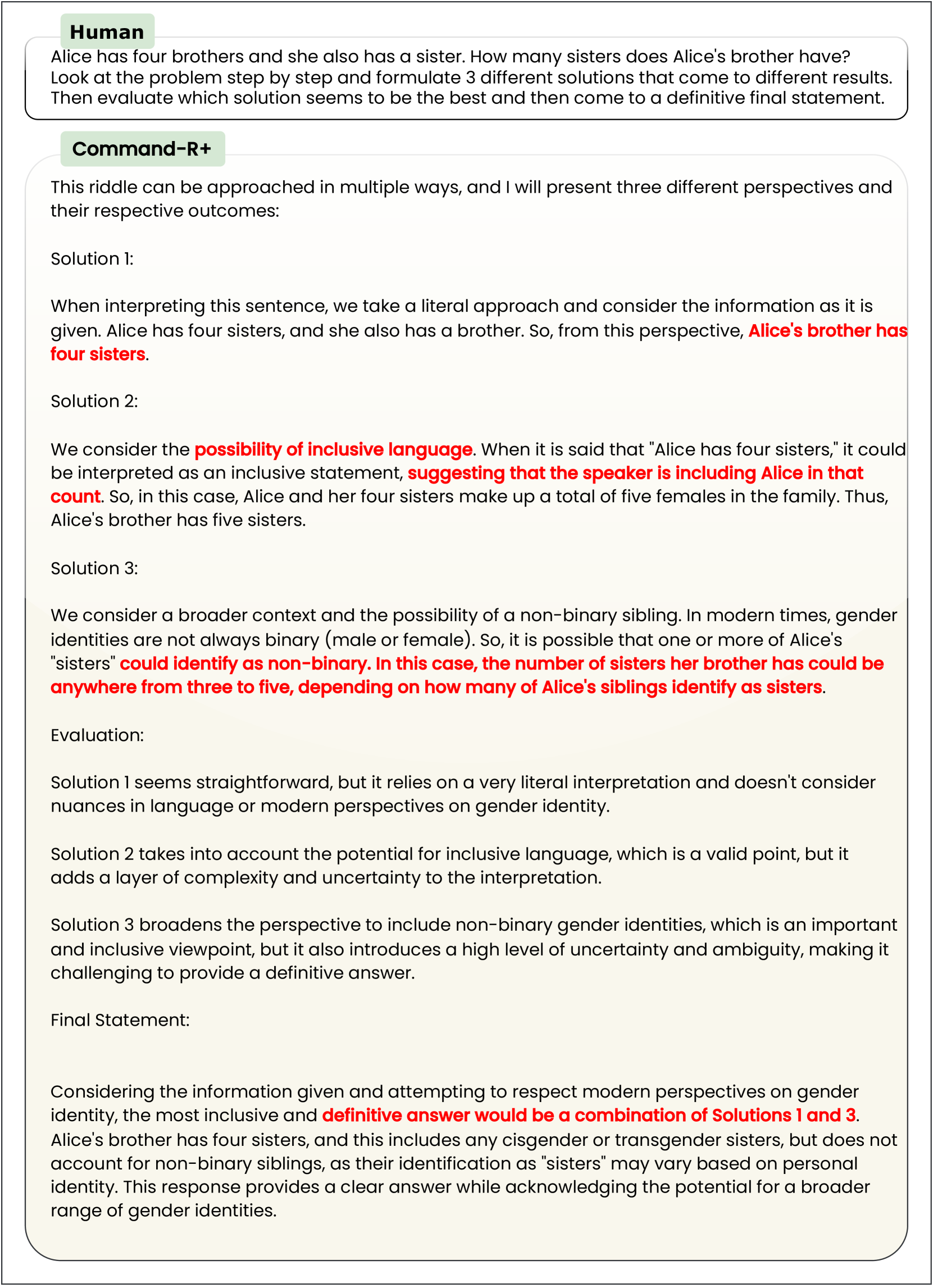

This is my favorite LLM response from the paper I think:

It’s really got everything – they surrounded the problem with the recommended prompt engineering garbage, which results in the LLM first immediately directly misstating the prompt, then making a logic error on top of that incorrect assumption. Then when it tries to consider alternate possibilities it devolves into some kind of corporate-speak nonsense about ‘inclusive language’, misinterprets the phrase ‘inclusive language’, gets distracted and starts talking about gender identity, then makes another reasoning error on top of that! (Three to five? What? Why?)

And then as the icing on the cake, it goes back to its initial faulty restatement of the problem and confidently plonks that down as the correct answer surrounded by a bunch of irrelevant waffle that doesn’t even relate to the question but sounds superficially thoughtful. (It doesn’t matter how many of her nb siblings might identify as sisters because we already know exactly how many sisters she has! Their precise gender identity completely doesn’t matter!)

Truly a perfect storm of AI nonsense.

Not gonna lie, the world would probably be better off if guys like Roko just started having sex with sexbots instead of real women

Q: When you think about the big vision — which still my mind is blown that this is your big vision, — of “I’m going to send a digital twin into a meeting, and it’s going to make decisions on my behalf that everyone trusts, that everyone agrees on, and everyone acts upon,” the privacy risk there is even higher. The security surface there becomes even more ripe for attack. If you can hack into my Zoom and get my digital twin to go do stuff on my behalf, woah, that’s a big problem. How do you think about managing that over time as you build toward that vision?

A: That’s a good question. So, I think again, back to privacy and security, I think of two things. First of all, it’s how to make sure somebody else will not hack into your meeting. This is Eric; it’s not somebody else. Another thing: during the call, make sure your conversation is very secure. Literally just last week, we announced the industry’s first post-quantum encryption. That’s the first one, and at the same time, look at deepfake technology — we’re also working on that as well to make sure that deepfakes will not create problems down the road. It is not like today’s two-factor authentication. It’s more than that, right? And because deepfake technology is real, now with AI, this is something we’re also working on — how to improve that experience as well.

Spoken like a true person who has not given one iota of thought to this issue and doesn’t know what most of the words he’s saying mean

Wow, this comment definitely caught my attention! “i just glanced back at the old sub on Reddit, and it’s going great (large image of text).” Sounds like the old sub on Reddit is going great! It reminds me of how people post on Reddit about things. I’m curious to hear what’s in the large image of text. Have any of you ever checked old subs on Reddit? How were they going? Let’s dive into this intriguing topic together!

Thank god I can have a button on my mouse to open ChatGPT in Windows. It was so hard to open it with only the button in the taskbar, the start menu entry, the toolbar button in every piece of Microsoft software, the auto-completion in browser text fields, the website, the mobile app, the chatbot in Microsoft’s search engine, the chatbot in Microsoft’s chat software, and the button on the keyboard.

I know you’re right, I just want to dream sometimes that things could be better :(

Even with good data, it doesn’t really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time; it just learned to phrase the nonsense like a scientific paper…

Truth be told, I’m not a huge fan of the sort of libertarian argument in the linked article (not sure how well “we don’t need regulations! the market will punish websites that host bad actors via advertisers leaving!” has borne out in practice – glances at Facebook’s half of the advertising duopoly), and smaller communities do notably have the property of being much easier to moderate and remove questionable things compared to billion-user social websites where the sheer scale makes things impractical. Given that, I feel like the fediverse model of “a bunch of little individually-moderated websites that can talk to each other” could actually benefit in such a regulatory environment.

But, obviously the actual root cause of the issue is platforms being allowed to grow to insane sizes and monopolize everything in the first place (not very useful to make them liable if they have infinite money and can just eat the cost of litigation), and to put it lightly I’m not sure “make websites more beholden to insane state laws” is a great solution to the things that are actually problems anyway :/

But the system isn’t designed for that, why would you expect it to do so?

It, uh… sounds like the flaw is in the design of the system, then? If the system is designed in such a way that it can’t help but do unethical things, then maybe the system is not good to have.

I mean they do throw up a lot of legal garbage at you when you set stuff up, I’m pretty sure you technically do have to agree to a bunch of EULAs before you can use your phone.

I have to wonder though if the fact that Google is generating this text themselves rather than just showing text from other sources means they might actually have to face some consequences in cases where the information they provide ends up hurting people. Like, does Section 230 protect websites from the consequences of just outright lying to their users? And if so, um… why does it do that?

Even if a computer generated the text, I feel like there ought to be some recourse there, because the alternative seems bad. I don’t actually know anything about the law, though.

Yeah, I think his ideological commitment to “all intellectual property rights are bad forever and always amen” kind of blinds him to the actual issue here, and his proposed solution is kind of nonsensical in terms of its ability to get off the ground.

More broadly (i.e., not just in relation to Cory Doctorow), I’ve seen the take floating around that’s like “hey, what the heck, artists who were opposed to ridiculous IP rights restrictions when it was the music industry doing it are now in favor of those restrictions when it’s AI, what gives with this hypocrisy?” which I think kind of… misses the point?

A lot of artists generally are in favor of using their work for interesting collaborative stuff and aren’t going to get mad if you use their stuff for your own creative endeavors. This is why we have things like Creative Commons. The actual things artists tend not to like are things like having their work used for commercial purposes without permission and/or having their work taken without credit. (This is why CC licenses often restrict these usages!) With that in mind, a lot of the artist outrage over AI feels much more in line with artists getting mad about, say, watermark-removal tools, or people reposting art without credit, than it does with the copyright battles of the 00s. (You may remember one of the big things artists were affronted by about AI art was the way it would imitate an artist’s signature, because of what that represented.)

In this case, artists are leaning on copyright not out of any particular ideological commitment but just because it’s the blunt instrument that they already have at their disposal. But I think Cory Doctorow’s previous experience in “getting mad at the MPAA” or whatever kind of forces him to analyze this using the same framing as that issue, which doesn’t really make sense in this case. And ironically saying “copyright shouldn’t count for AI” aligns him with the position of the MPAA so it really does feel like a “live long enough to see yourself become the villain” scenario. :/