I found this while searching for information on how to program for the old Commodore Amiga's HAM (Hold And Modify) video mode, and you gotta touch and feel this one to properly sneer at it, cause I haven't seen a website this aggressively shitty since Flash died. the content isn't even worth quoting; it's just LLM-generated bullshit meant to SEO this shit site into the top result for an existing term (which worked). but just clicking around and scrolling on this site will expose you to an incredible density of laggy, broken full-screen animations that take way too long to finish and block the content until they're done, alongside a long list of other violations of good design sense (find your favorites!)

bonus sneer

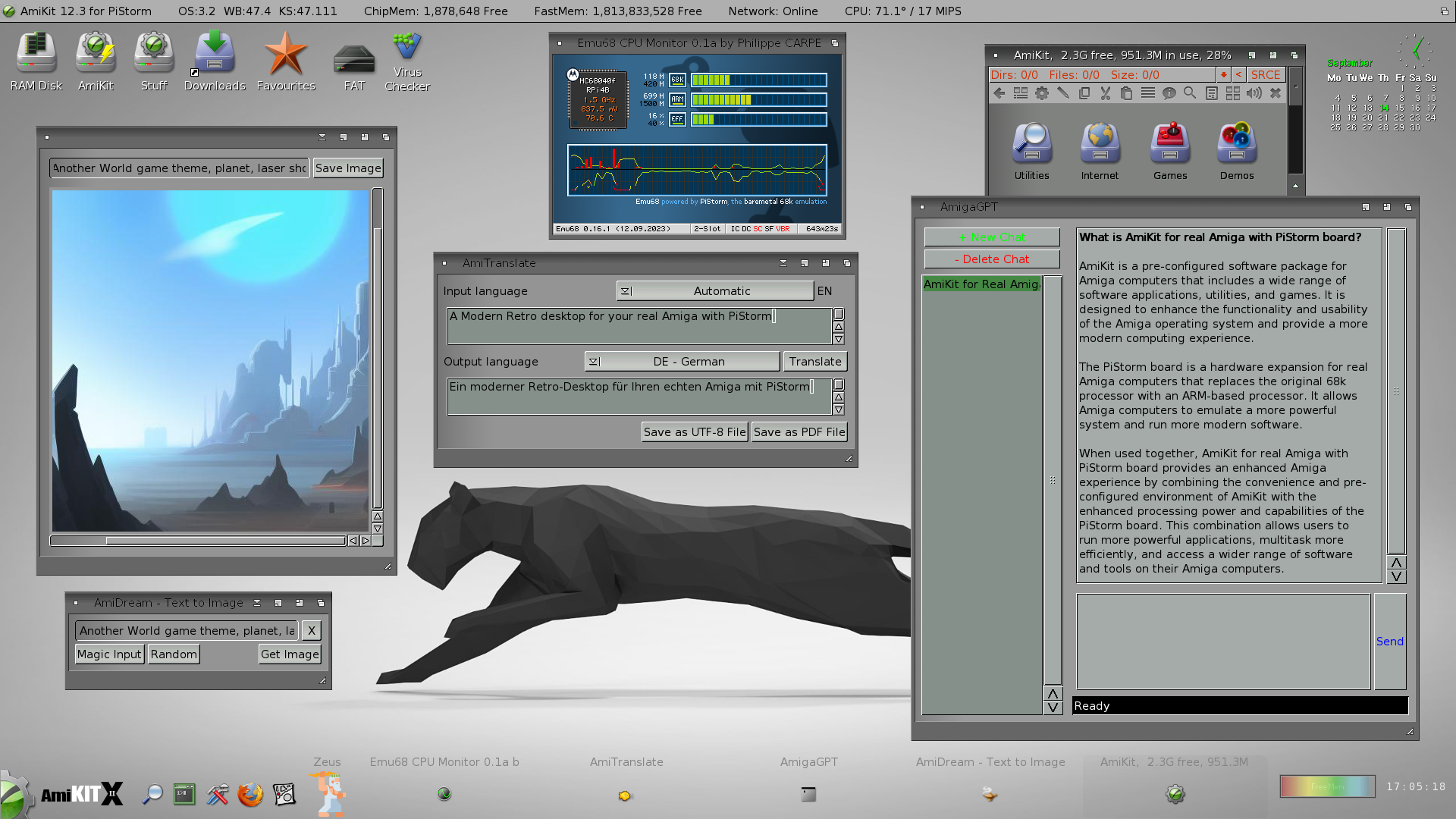

arguably I'm finally taking up Amiga programming as an escape from all this AI bullshit. well fuck me I guess, cause here's one of the vultures in the retrocomputing space selling an enshittified (and very ugly) version of AmigaOS with a ChatGPT app and an AI art generator, since not even running a 30-year-old computer will spare me this bullshit:

like fuck man, all I want to do is trick a video chipset from 1985 into making pretty colors. am I seriously gonna have to barge screaming into another German demoscene IRC channel?

I was just opening Pouët to pull up this year's Revision entries

if you look at its specs, it's absolutely astounding what sceners produce on the Amiga

edit: this made me think of something, so I posted about it

fuck yeah! an OCS Amiga runs on a ~7MHz Motorola 68000 CPU (the basic one with no cache or FPU), usually 512k of RAM (modern demos and games often grab a luxurious full 1MB, cause a lot of Amigas got that RAM upgrade back then) and a single 880k floppy drive with no other permanent storage. but the custom chips (Agnus with its blitter and copper, Denise for video, Paula for audio and disk I/O) all run as concurrently with the CPU as possible, in a way that feels like having a primitive GPU but with a lot more control over what its individual components do
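to put those specs in perspective, here's some back-of-the-envelope math as a quick Python sketch. it assumes a PAL machine (68000 at ~7.09379 MHz, 50 Hz), the standard AmigaDOS double-density floppy layout (80 tracks per side, 2 sides, 11 sectors of 512 bytes), and a 320x256 low-res screen. HAM6 uses 6 bitplanes, so that's the screen it sizes. the function names are just made up for illustration:

```python
# Back-of-the-envelope numbers for a stock OCS Amiga.
# Assumptions (illustrative, not from any official tool): PAL timing,
# AmigaDOS double-density floppy geometry, 320x256 low-res screen.

BYTES_PER_SECTOR = 512
SECTORS_PER_TRACK = 11   # AmigaDOS DD track format
TRACKS_PER_SIDE = 80
SIDES = 2

PAL_CPU_HZ = 7_093_790   # 68000 clock on PAL machines (~7.09 MHz)
PAL_FIELD_HZ = 50        # PAL refresh rate

CHIP_RAM_BYTES = 512 * 1024


def floppy_capacity_bytes() -> int:
    """Formatted capacity of an Amiga DD floppy, in bytes."""
    return BYTES_PER_SECTOR * SECTORS_PER_TRACK * TRACKS_PER_SIDE * SIDES


def bitplane_screen_bytes(width: int, height: int, planes: int) -> int:
    """Chip RAM for a planar screen: 1 bit per pixel in each bitplane."""
    return (width // 8) * height * planes


def cpu_cycles_per_frame(cpu_hz: int = PAL_CPU_HZ,
                         refresh_hz: int = PAL_FIELD_HZ) -> int:
    """Whole CPU clock cycles elapsing during one video frame."""
    return cpu_hz // refresh_hz


if __name__ == "__main__":
    floppy = floppy_capacity_bytes()
    ham6 = bitplane_screen_bytes(320, 256, 6)  # HAM6 = 6 bitplanes
    print(f"floppy: {floppy} bytes = {floppy // 1024} KiB")
    print(f"HAM6 320x256 screen: {ham6} bytes "
          f"= {ham6 / CHIP_RAM_BYTES:.1%} of 512 KiB chip RAM")
    print(f"CPU cycles per PAL frame: {cpu_cycles_per_frame()}")
```

that works out to the familiar 880 KiB per disk, about 12% of a stock 512k machine's chip RAM just for one HAM6 screen, and roughly 140k CPU clock cycles per frame. not all of those cycles are actually free for your code, since bitplane and other custom-chip DMA can take bus slots whenever the CPU wants chip RAM, which is exactly why offloading work to the blitter and copper matters so much.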